The UX of AI in Games

Experiments and iteration on the path to unlocking new ways to play

Overview

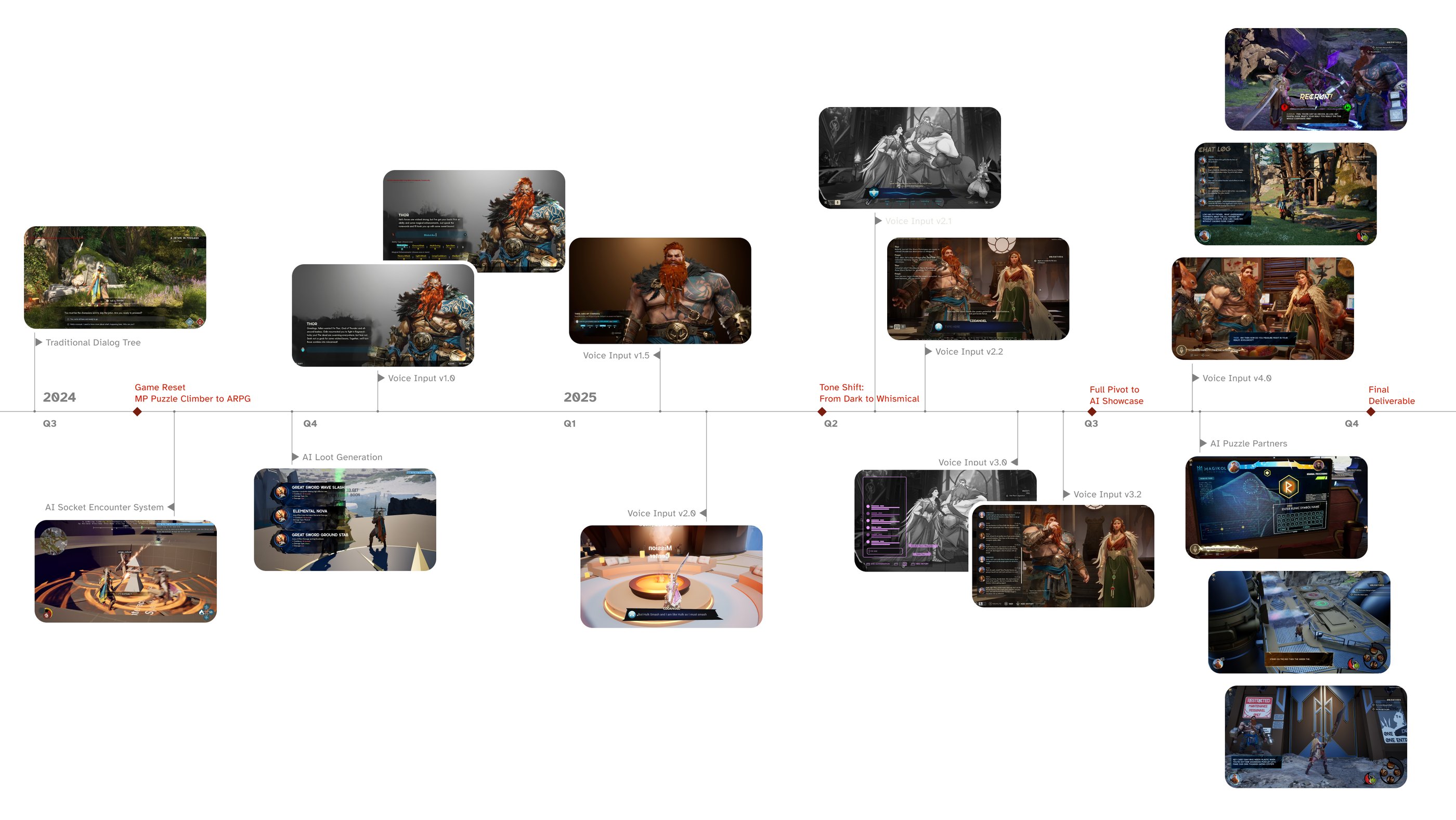

In May of 2024, our studio took a hard pivot. With a foundation of solid traversal and combat mechanics, we determined that our biggest differentiating feature would be gameplay that was only possible with AI. Amazon was developing a number of different approaches to LLMs, and we were certain we could deliver innovation and AAA production values in the same experience. But we needed to build a lot of technology and experiment like crazy to separate the truth from fantasy.

Role

UX Director with hands-on UX, Visual, Motion Design and prototyping

Rune Words & Rewards

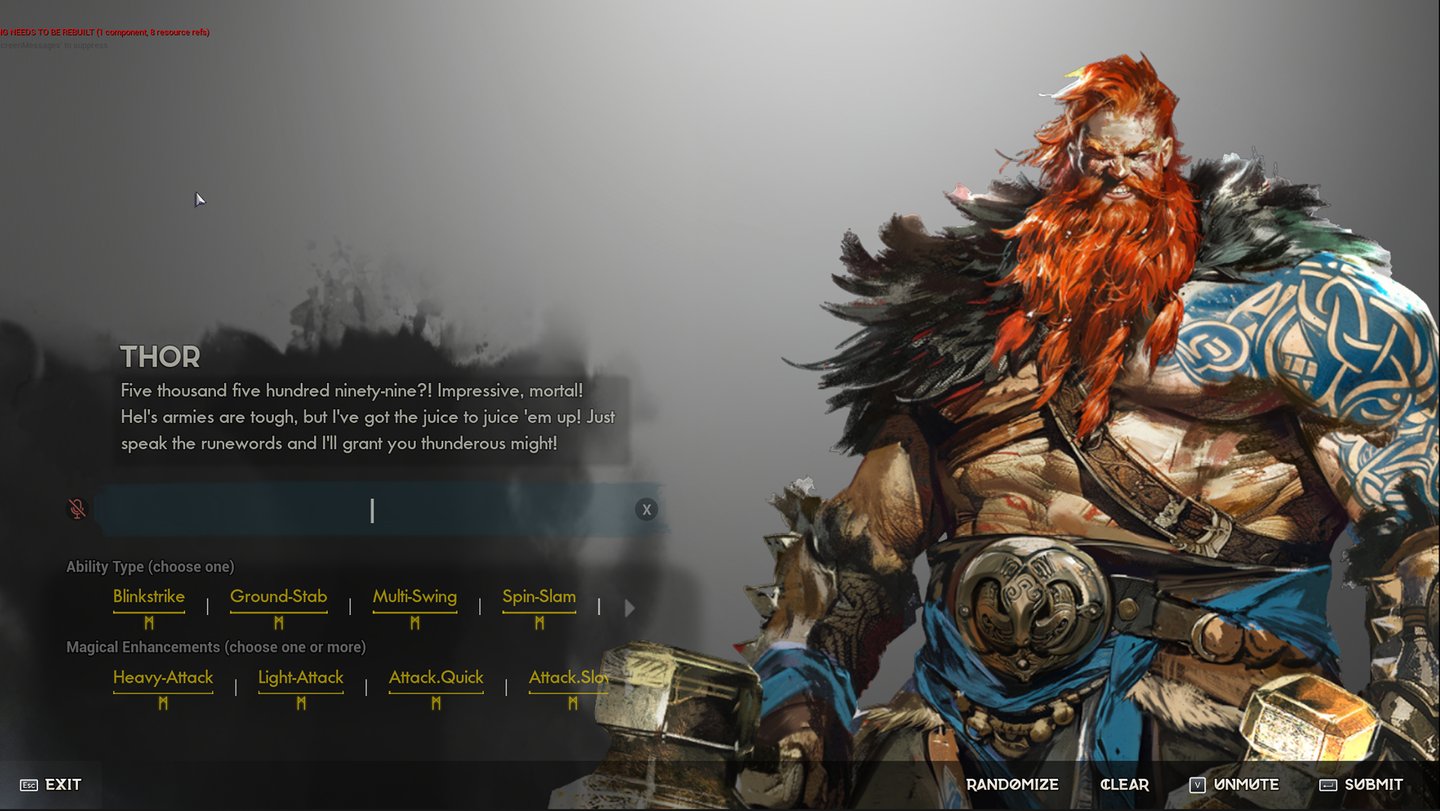

Our first experiments centered on the promise of natural conversation with a benevolent game master. The AI would understand the rules of the game and the player would shape aspects of the environment through dialog. But to constrain a nearly infinite number of dimensions we created discoverable rune words that became the atoms from which the AI could create.

Goals

Strong emotional connections and story engagement with NPCs that the player can improvise with in humorous and unexpected ways.

A core backbone of speech and intent processing that can also drive gestures, facial expressions, and voice acting.

Game mechanics that highlight what LLMs are actually good at.

A showcase of different game modalities wrapped in a AAA-quality game experience.

Tools

Figma, Creative Suite, Unreal 5.3

Team

1 Visual Designer, 1 Motion Designer, 1 FX Artist, 1 Design Technologist, 1 UI Engineer, 1 UX Producer

+

2 ML Scientists, 2 Gameplay Programmers, 2 Narrative Designers, 2 Game Systems Designers, 3 Level Designers

+

Art, Game Design, & Engineering Teams

Timeline

12 months total in 4 acts (~3 months each)

Cognitive Overload

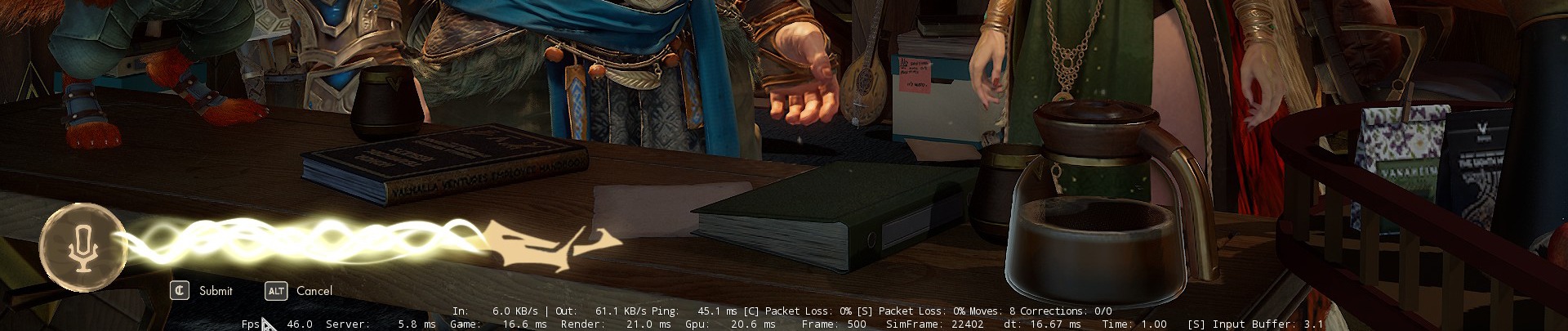

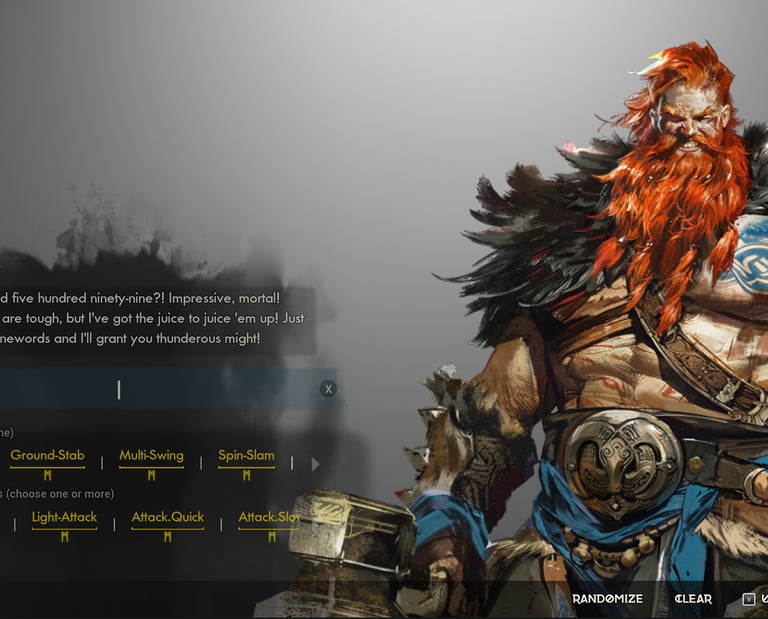

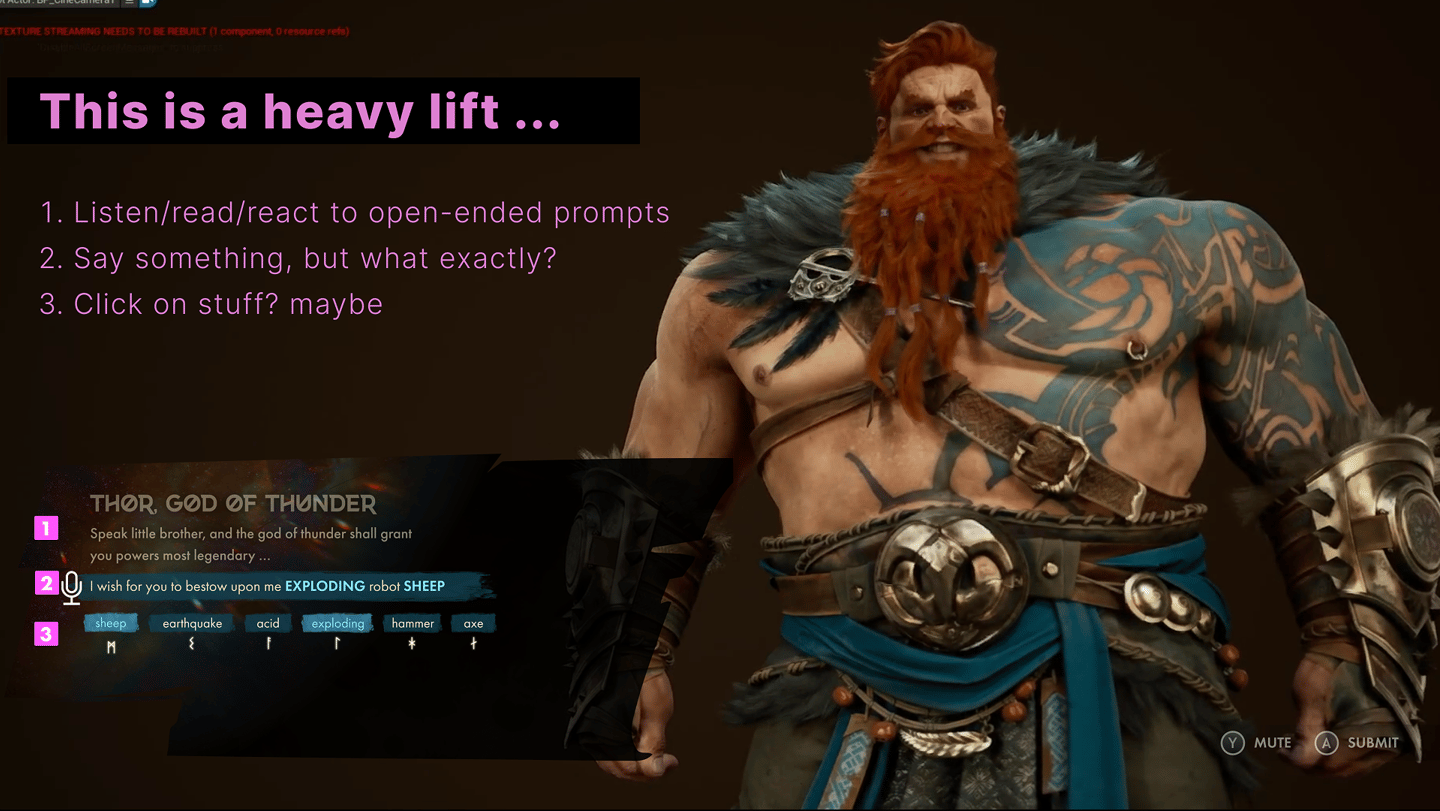

We built a multi-modal input system for voice, mouse, keyboard, and gamepad, allowing players to seamlessly assemble sentences by speaking, typing, or selecting rune words.

It was powerful but turned creativity into a chore with too many prompts competing for attention.

The Approach

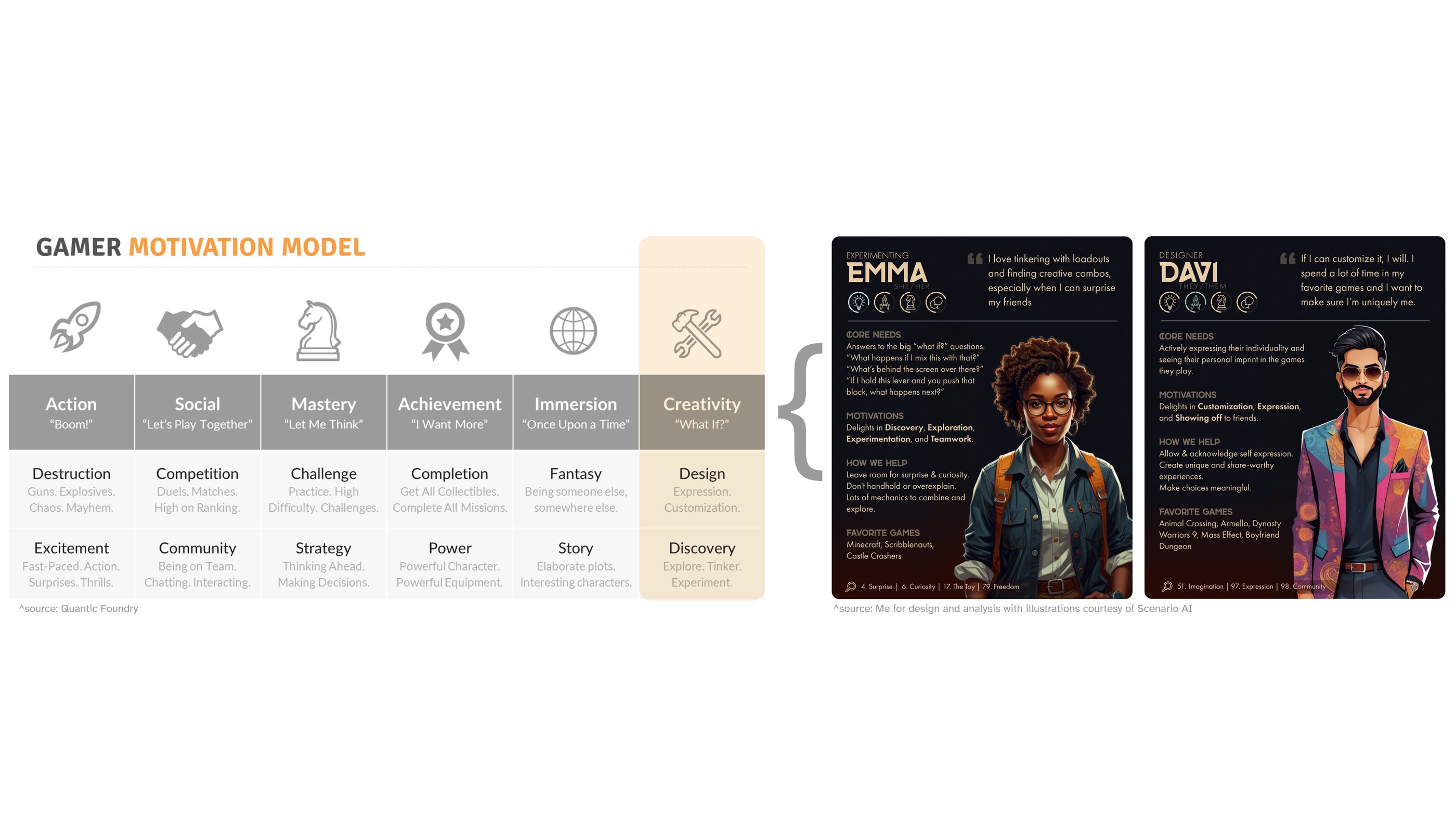

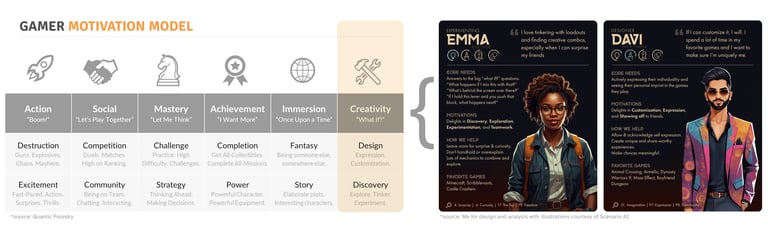

Our very first step was to determine what type of players would be most excited about AI-driven gaming. We worked with the Quantic Foundry gamer motivations framework and determined that the strengths of AI would naturally align with the Creator/Builder/Experimenter as opposed to the more typical Action or Mastery player. This helped focus our own experiments moving forward.

Version 1.0

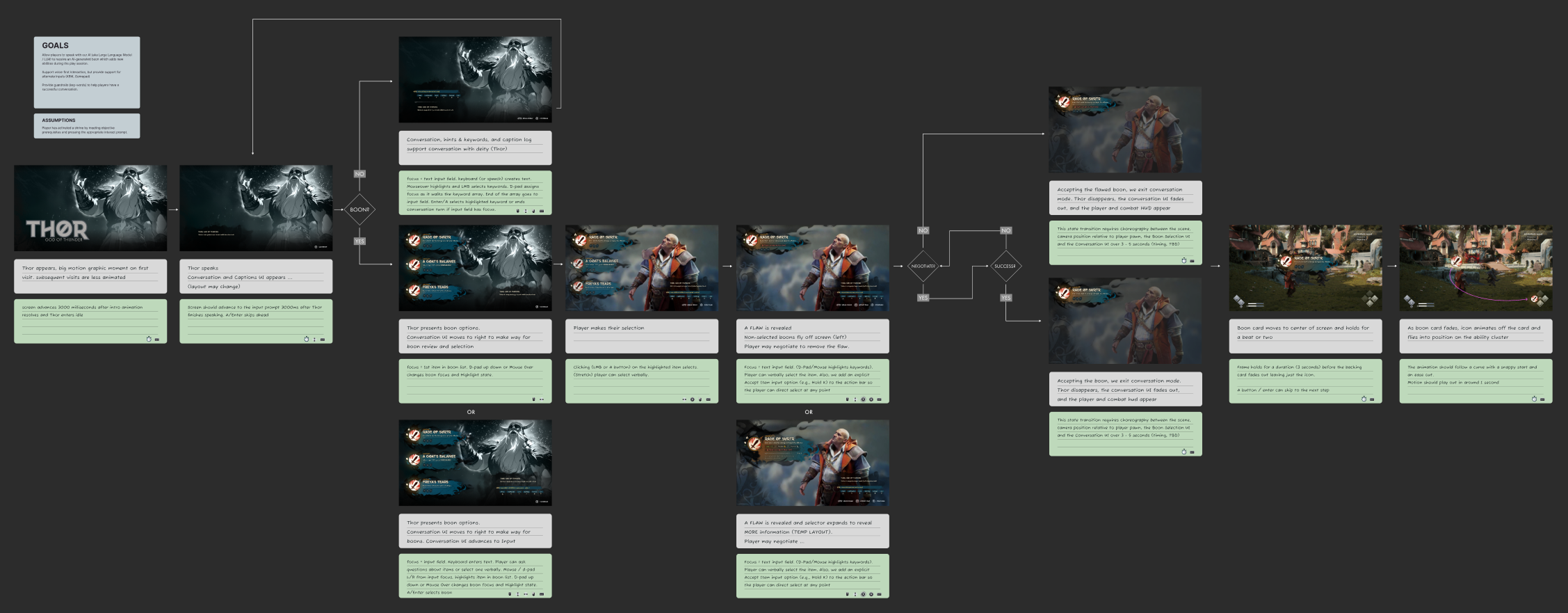

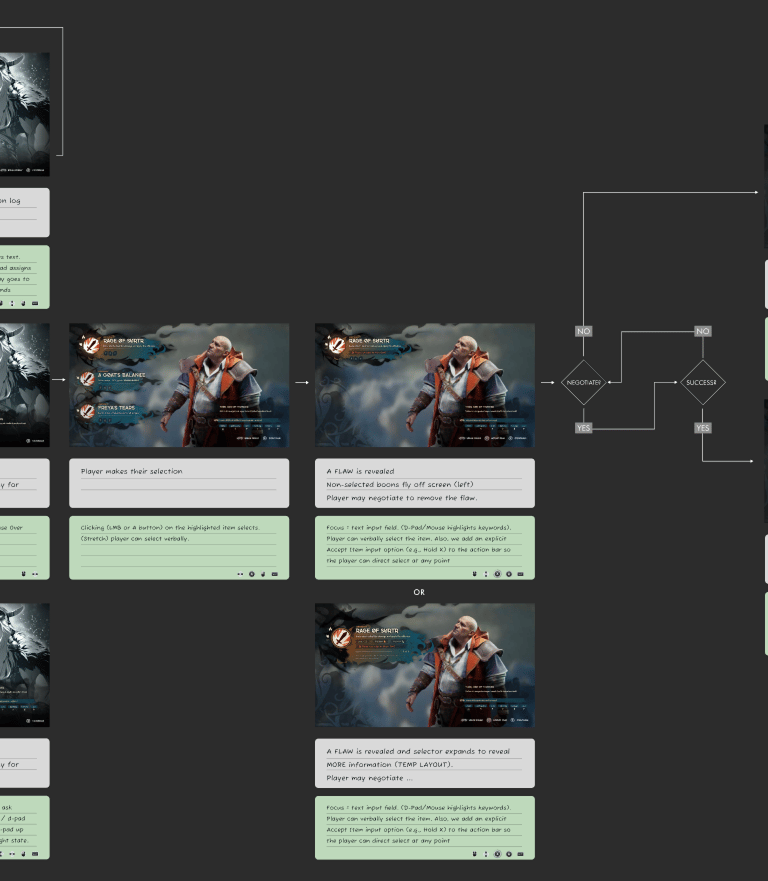

The Burden of Choice

And as we stripped away layers of complexity in the interaction model (fewer things to click on), we introduced the ability cards (more things to scrutinize).

The gameplay here was to select an LLM-generated ability and then convince the NPC to remove any resulting quirks or flaws using conversational tactics like flattery or threats. But this, too, was challenging for players to negotiate.

Maybe Polish will Help?

At this point, the art team was working on a stunning showcase of LLM-driven voice acting, facial animation, and gesture. So I built a quick North Star video on top of the animation and VO work to test whether this whole approach might deliver something fun, or at least emotionally resonant, assuming we could tighten up the delay between round trips.

But we Needed a Reset

In just 3 months, we delivered strong foundations for speech processing, intent mapping, narrative development, character acting, and even AI-generated game objects. But at the end of this milestone, we realized how far we'd drifted from our tenets of immersion, clarity, and spectacle.

Key Challenges

Lessons Learned

Things to Keep

Players ignored conversation in favor of clicking keywords.

Random rewards weren't great.

The whole sequence took "forever".

Too much uncertainty about what to say, how to ask for something good.

Strong character presence really delivers in first person.

At first, the team had reservations about voice interaction due to system latency. A core pillar of gameplay is reaction time, so the earliest trials focused on systems where we could mask delays. Things like generative level construction, player-driven narrative development, generative loot tables ... anything BUT voice input and conversation.

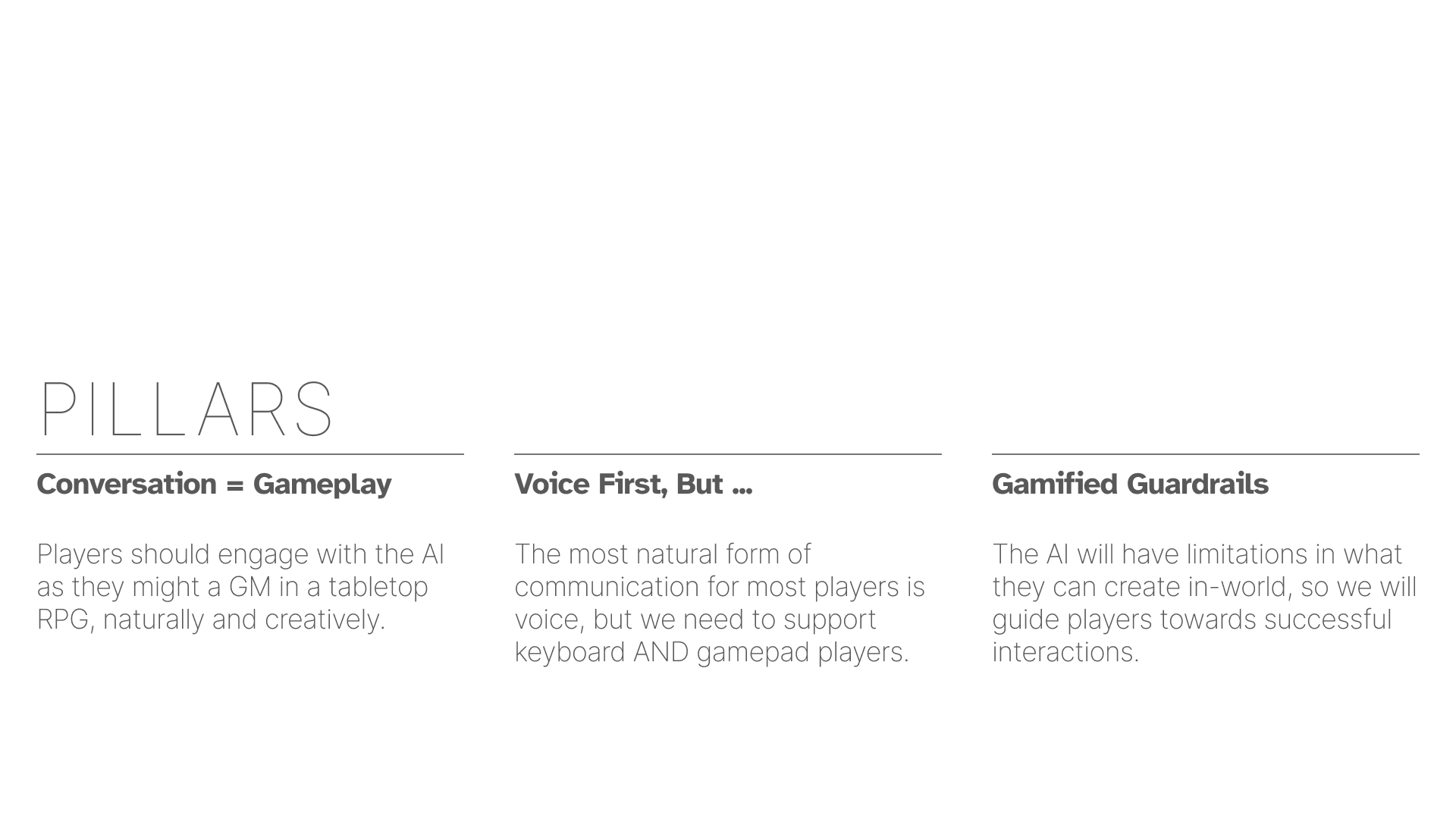

And while there were nuggets of potential, the vast majority of these systems didn't play well or didn't feel any different from large, combinatoric, procedurally generated experiences. With the developing expectation of integrating Alexa's core technology alongside eye-catching demonstrations of LLM capabilities in cut scenes powered by the art team, we quickly decided to invest in conversation. In fact, we started asking "what if conversation WAS the gameplay?"

So for 11 months we focused on turning conversation into gameplay. We tried a lot of different models. The important thing was to stand up as many experiments in-world as possible, because context is everything.

Set players up for success.

Give them choices that matter.

Open-ended turns are too much.

Simplify the input model. Power is inversely proportional to ease of use.

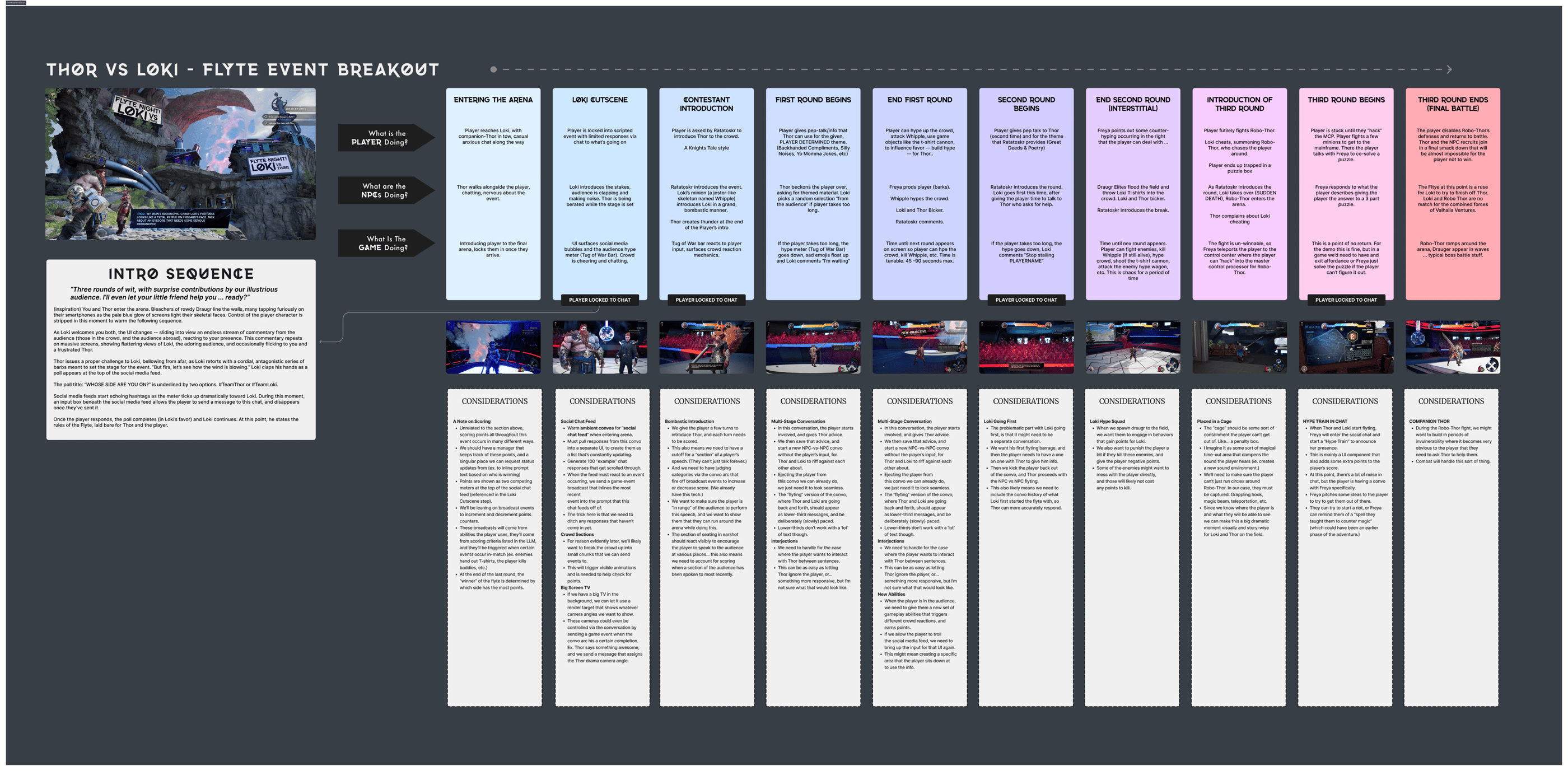

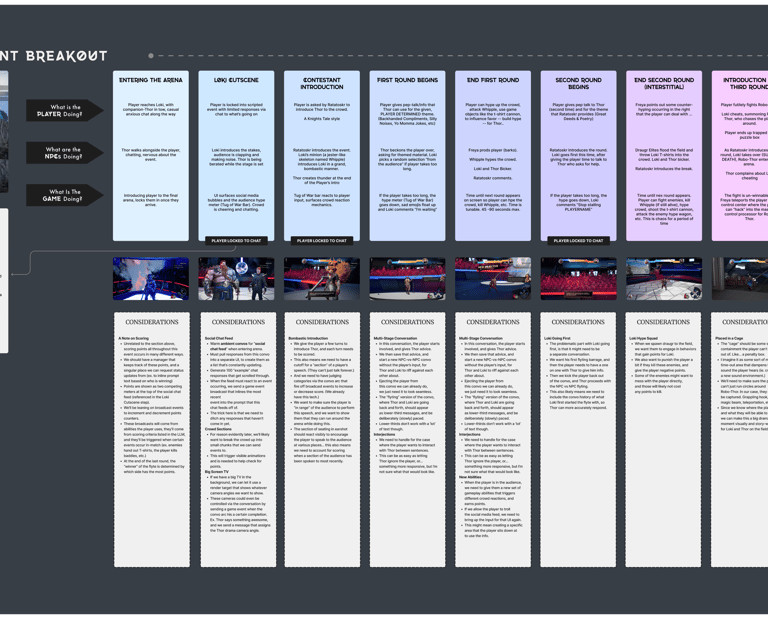

The Cinematic Stage

To truly showcase the characters, we needed an input model that was both characterful and cinematic, streamlined without being plain. To the left is a 3.5-minute narrated walkthrough that I put together to get the team thinking in a new direction.

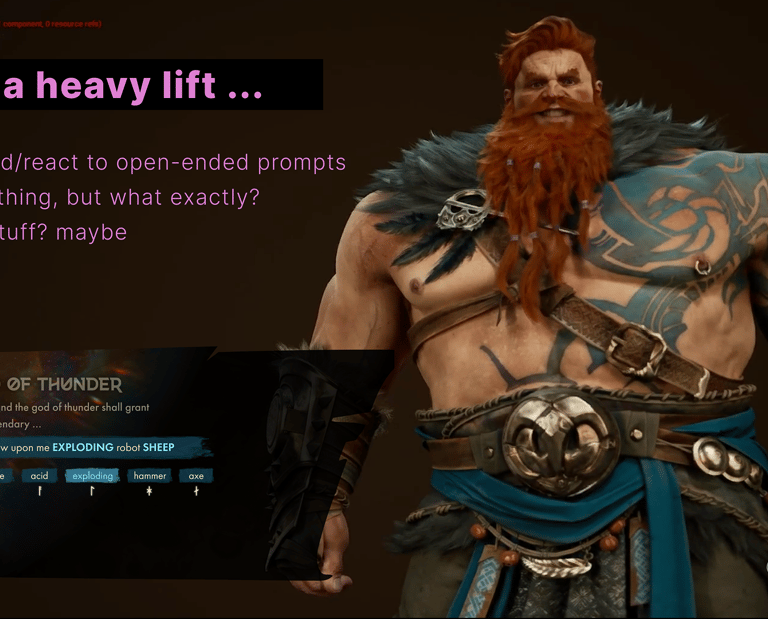

Character First

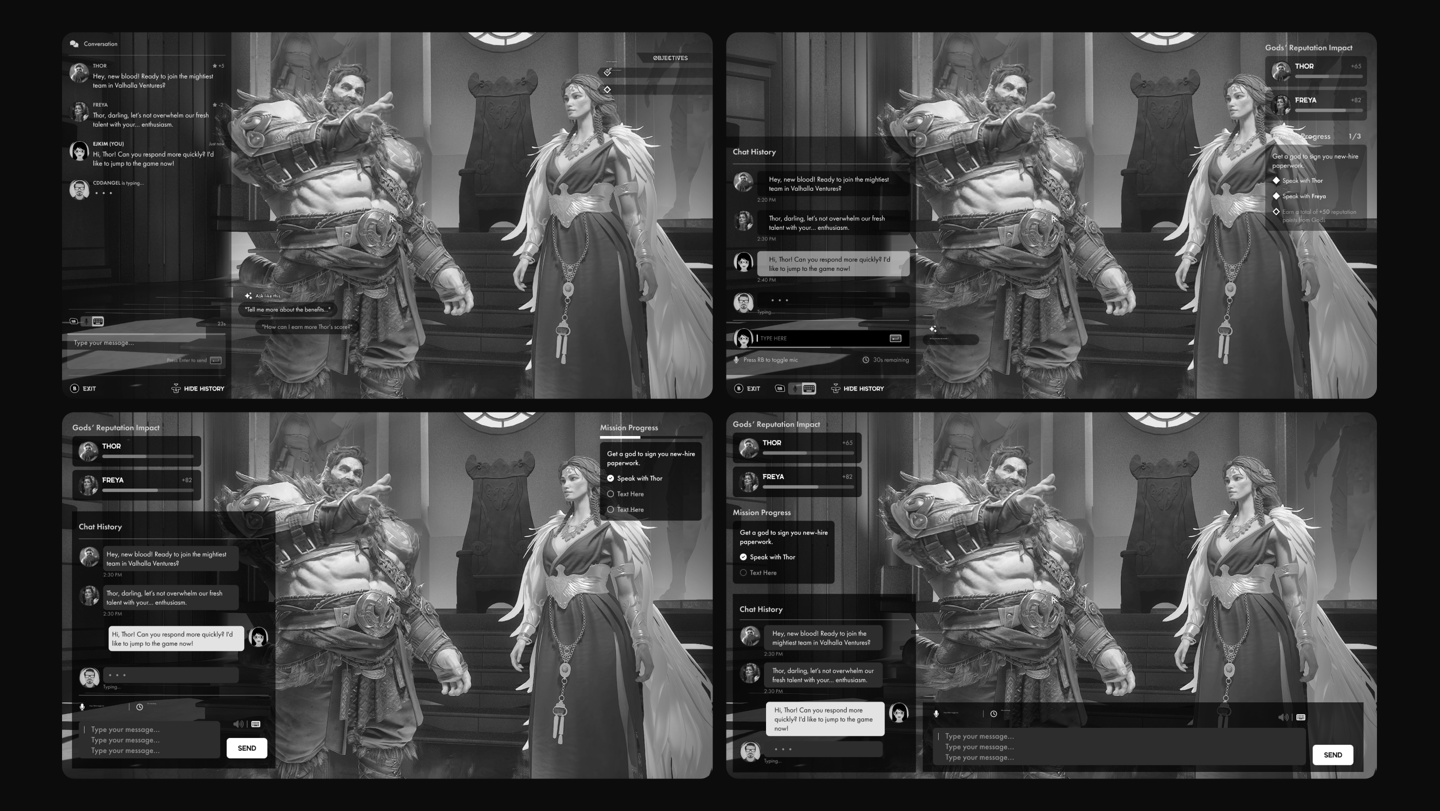

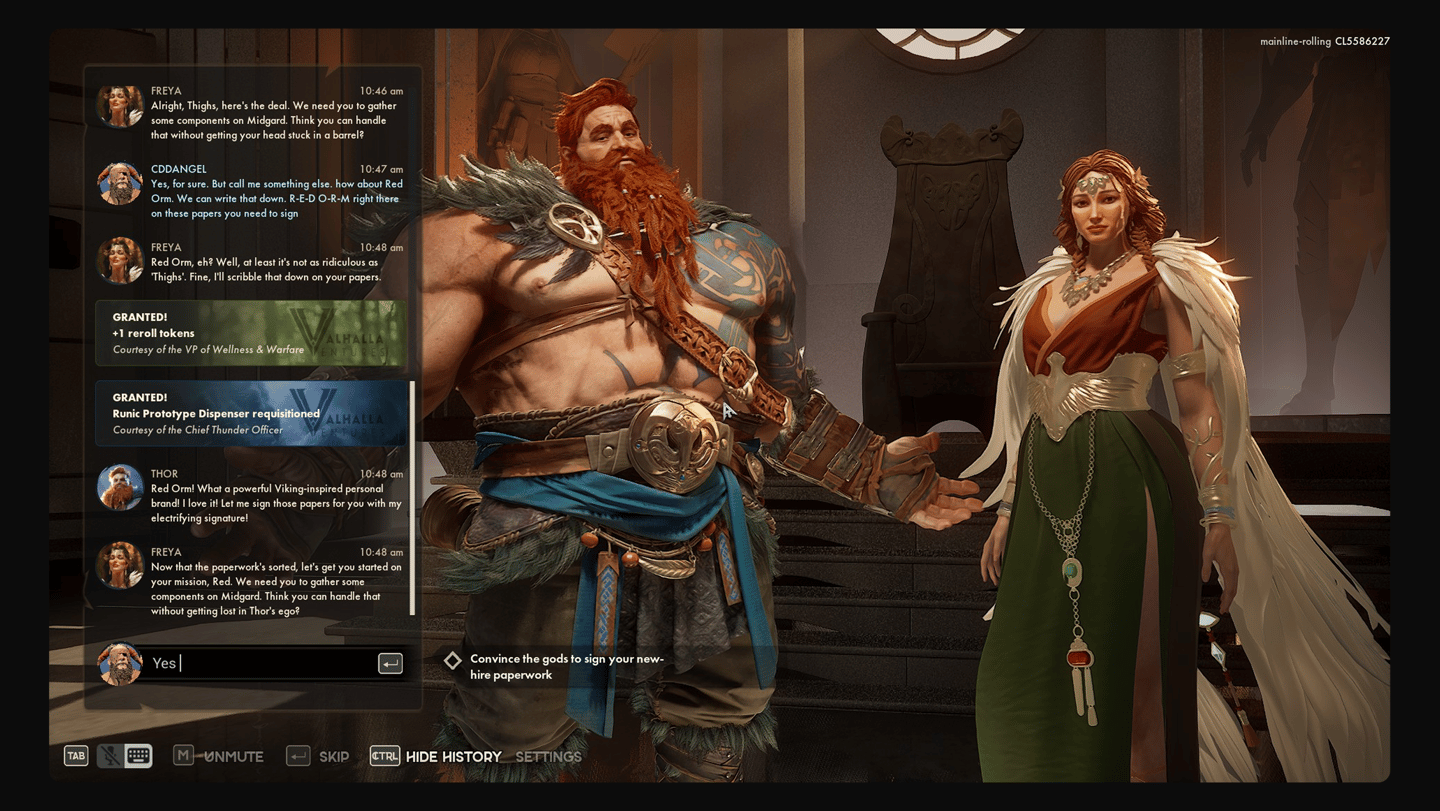

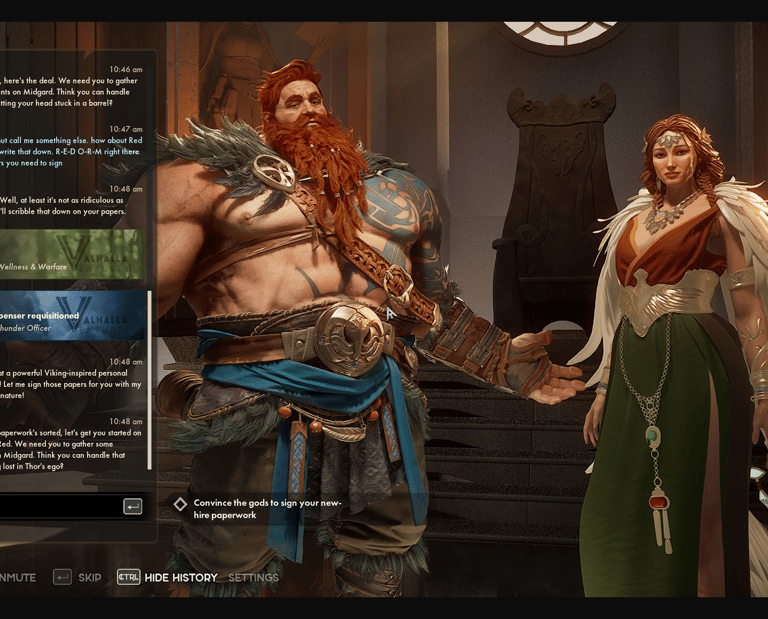

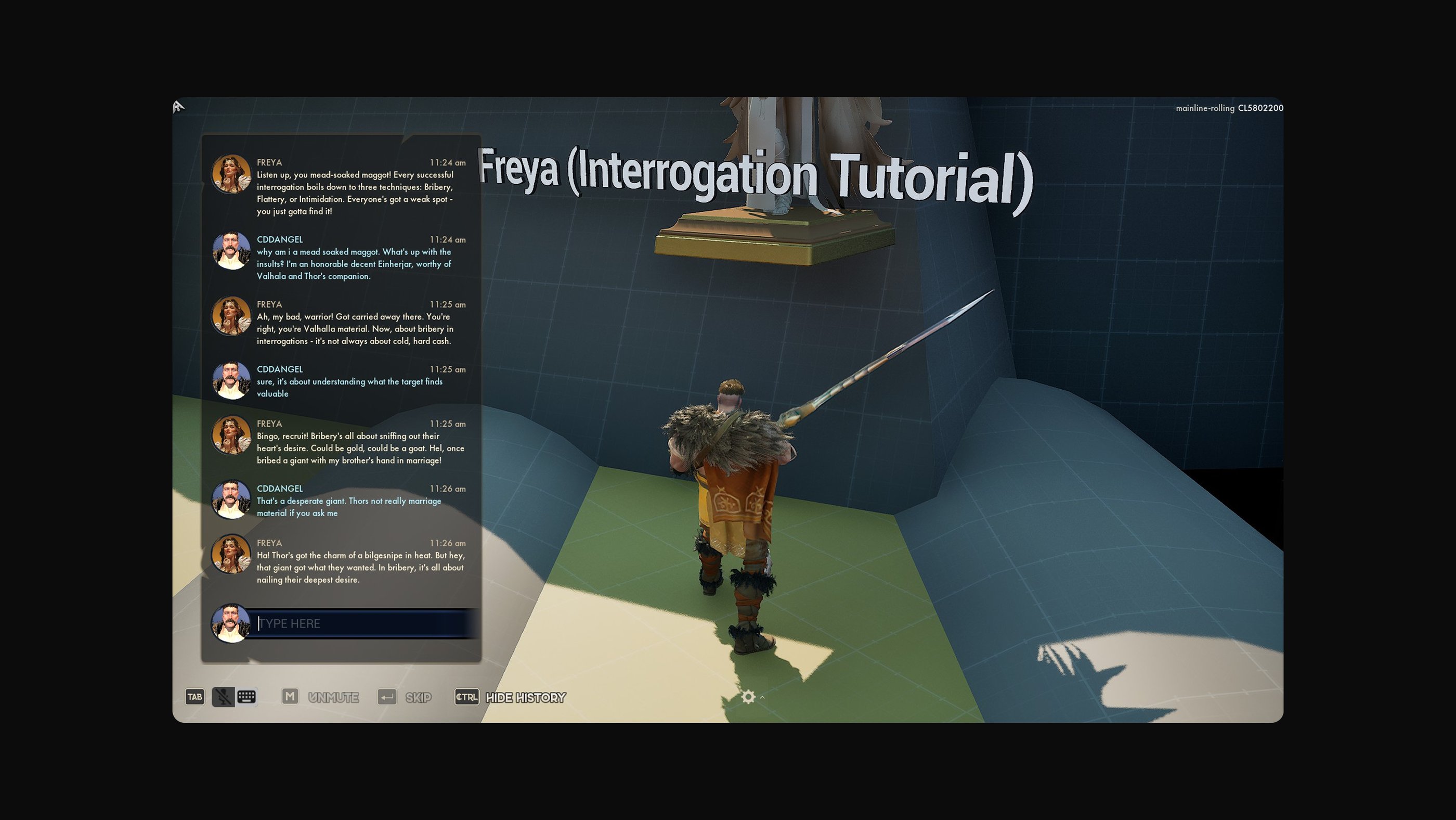

More real estate for the NPC and a streamlined region for voice or keyboard input. No more rune words, much less clutter. We're making big strides in the clear interaction window department.

Version 2.0

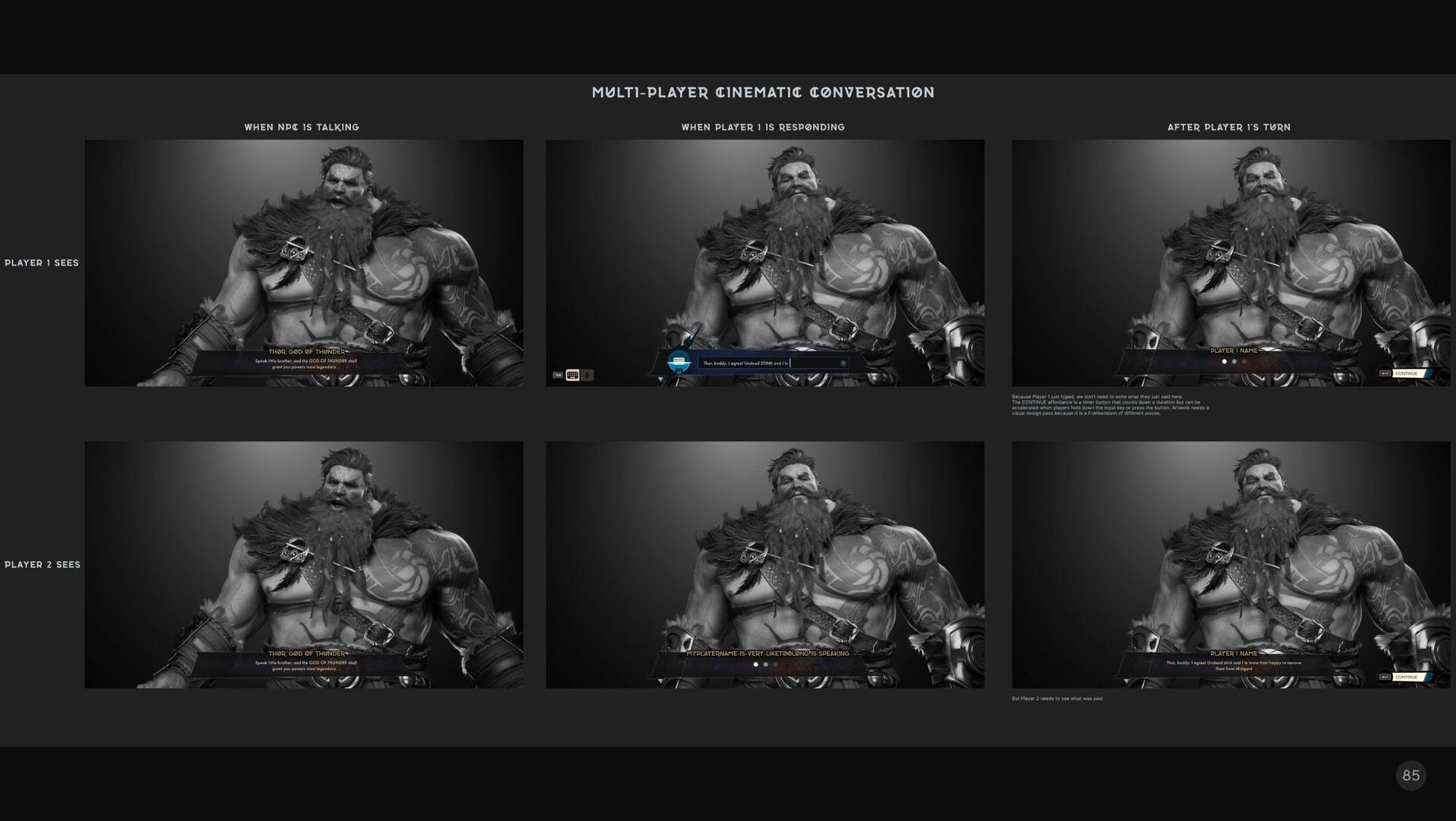

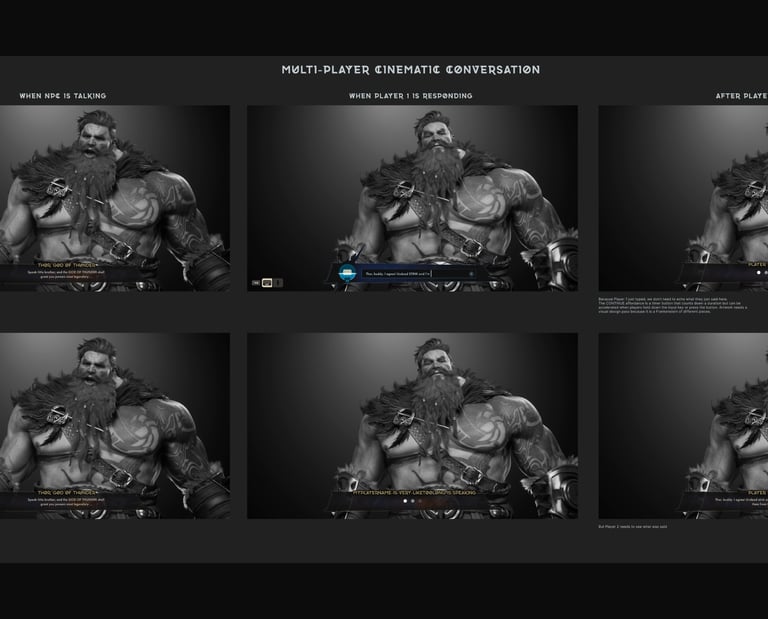

But Multi-Player, too

At this time we were still a multi-player game, which meant you could have two people talking to an NPC (or waiting to talk, as the case might be). We didn't want to show players in these conversations, so we were getting into some tricky territory.

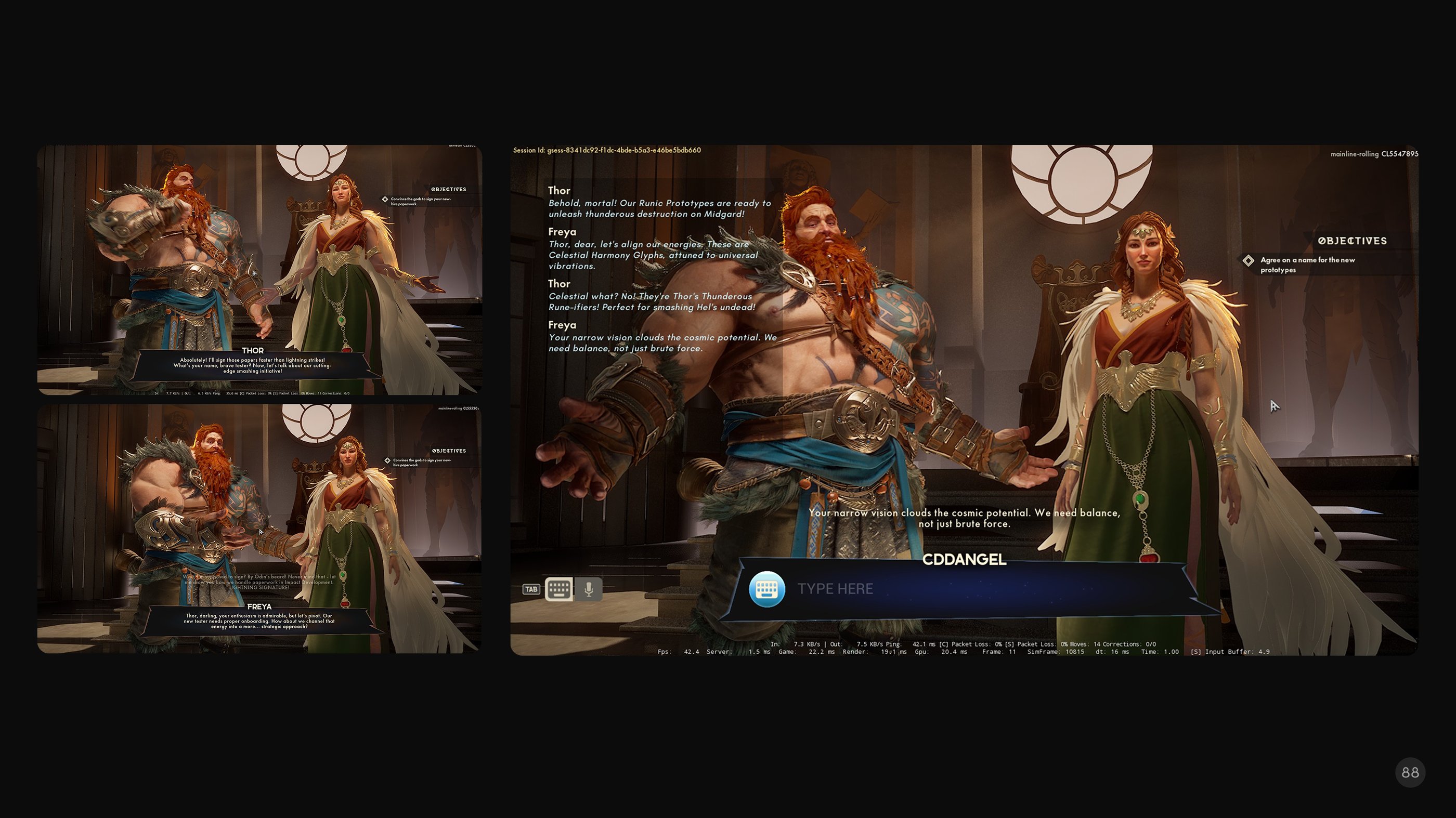

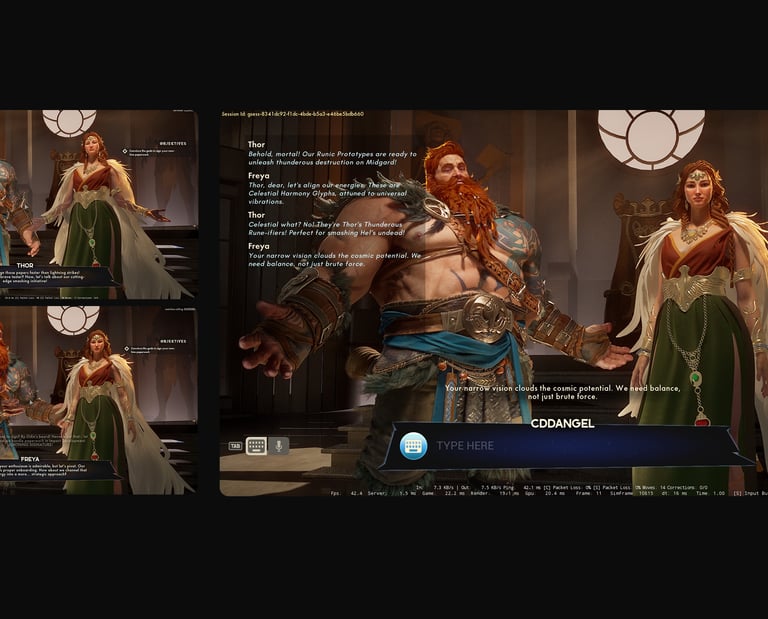

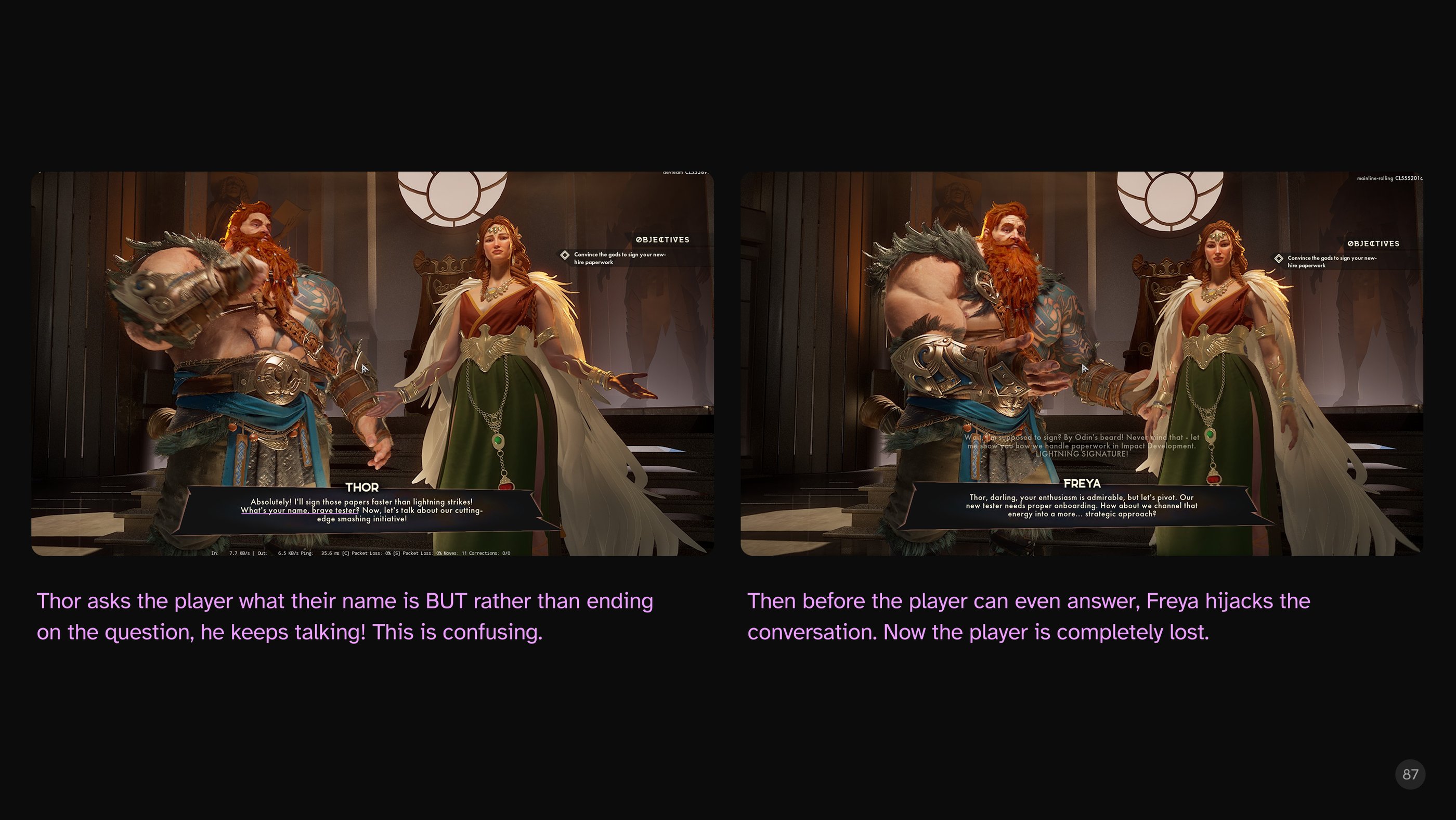

And Multiple NPCs

To showcase the breadth of personalities in our game, we chose to add another NPC to the fray. This sounded fantastic in theory but presented an array of wrinkles. NPCs would often interject with rhetorical questions that didn't require player input, burying any prompt the player might actually respond to.

For the Record

Playtesters kept getting lost in all the witty banter, so we tried adding a conversation history for players to reference if they needed it. But they kept the log up. All the time. I monitored scores of game sessions, and as soon as players discovered the conversation history, they left it up. So much for cinematic minimalism.

Key Challenges

Lessons Learned

Things to Keep

NPCs were bad scene partners, stepping on each other's lines and leaving players confused.

Turn-taking with other players was wholly unsatisfying (not surprising).

Players kept the conversation log up the whole time, creating a problem of divided attention.

Add reputation toast, conversation objectives, and input prompts for console players; it's amazing how fast something clean becomes messy.

Conversation history and the multi-sensory feedback are essential.

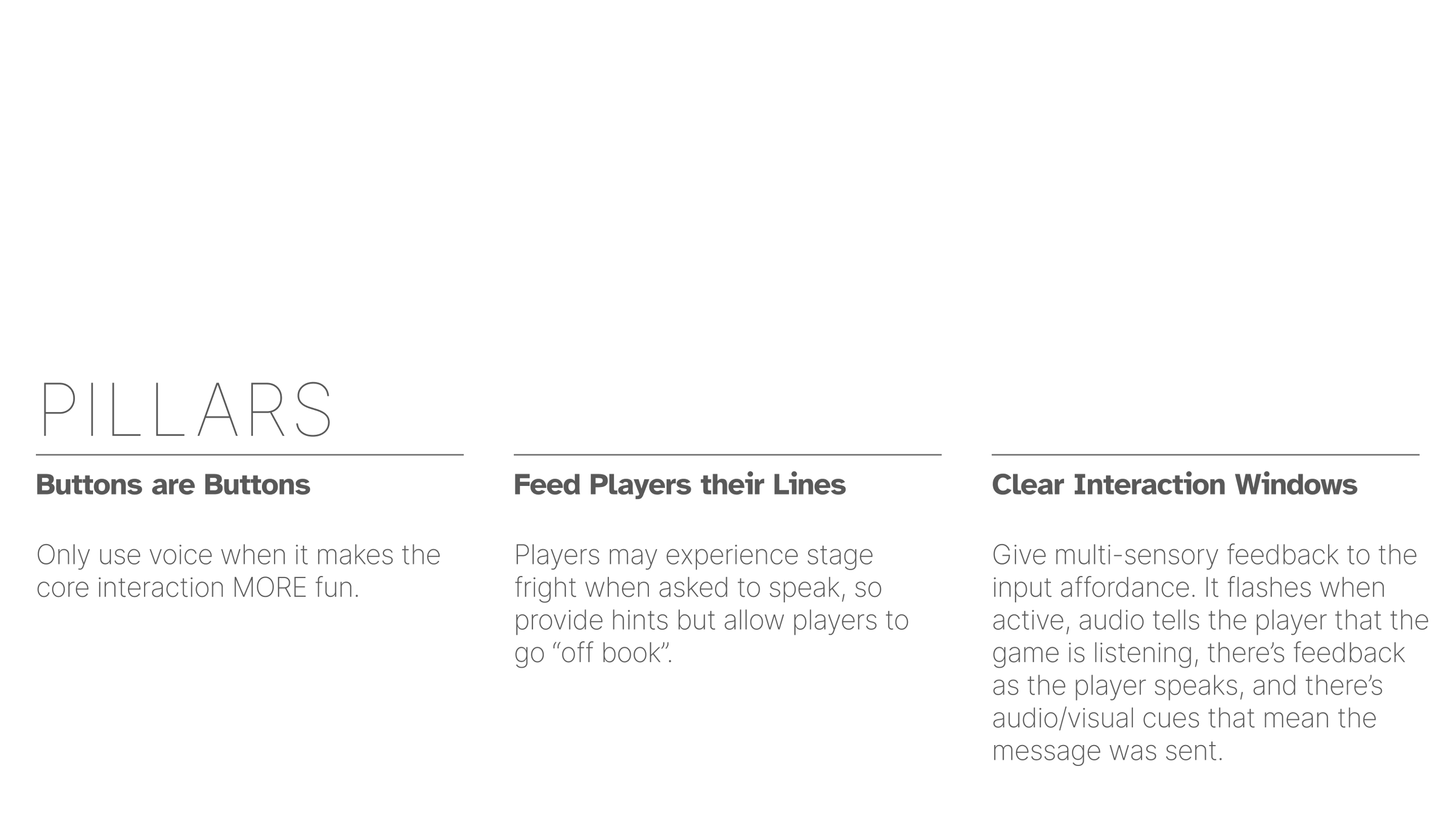

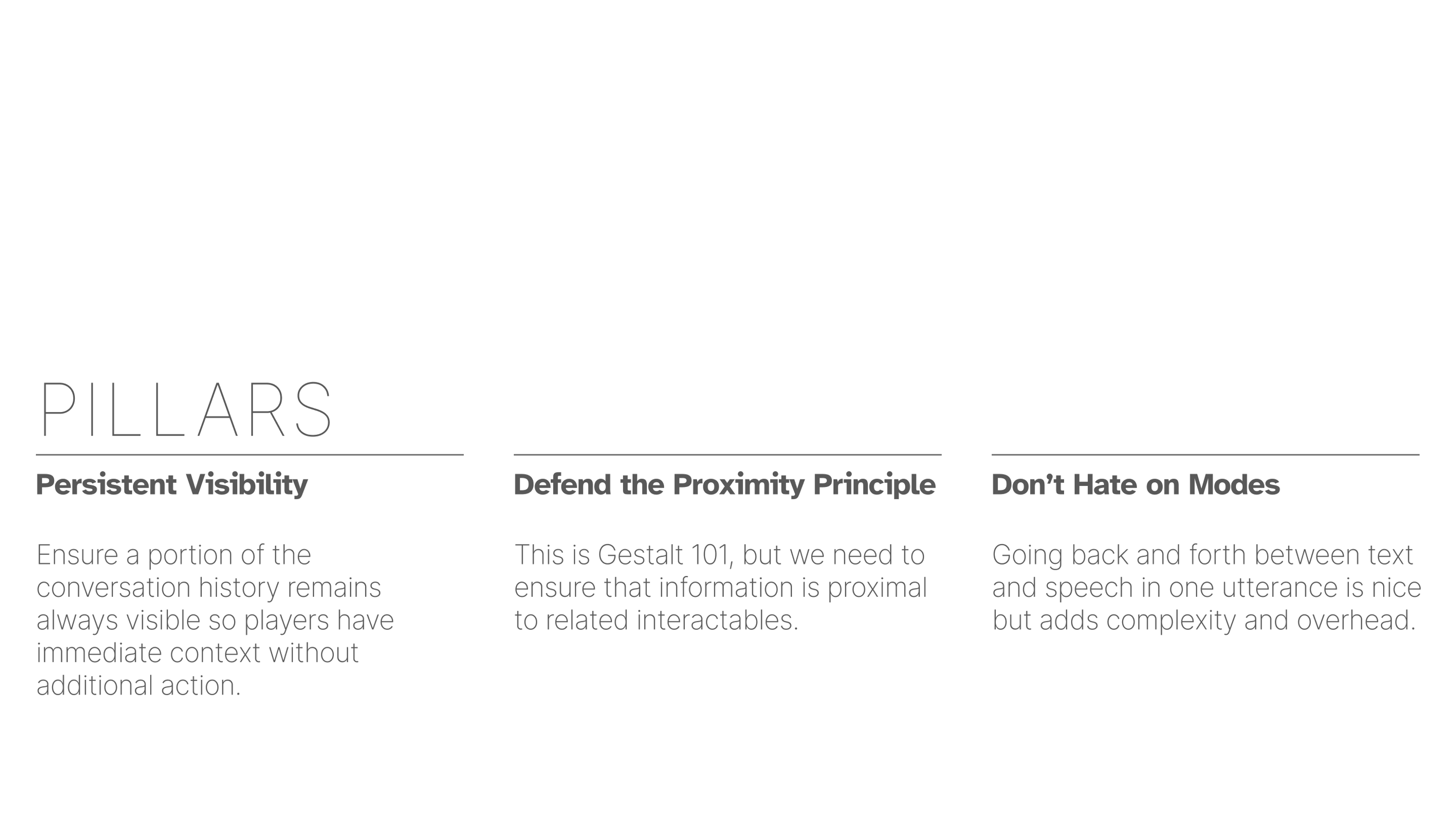

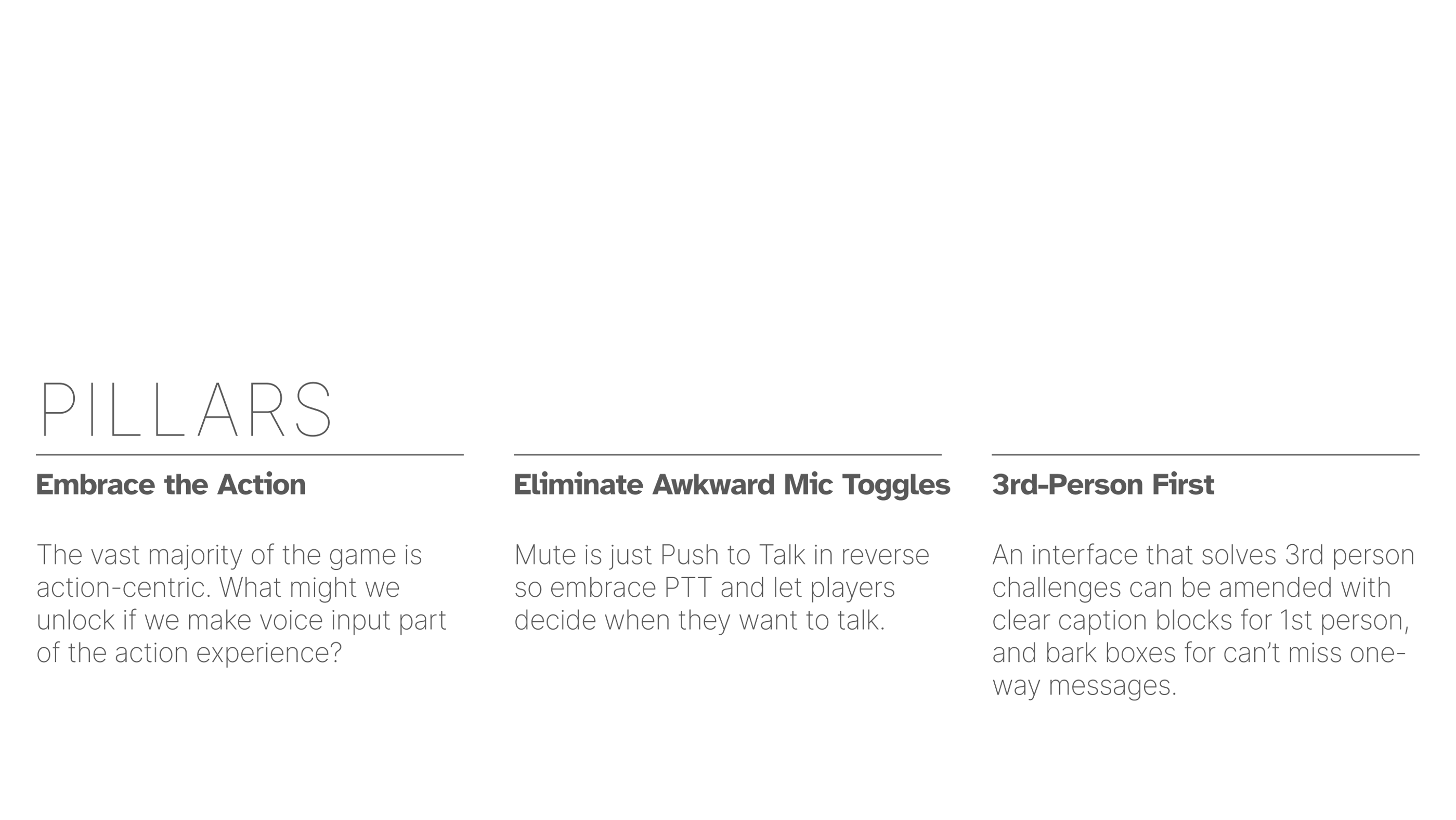

With lessons in hand, we added new pillars and continued our exploration.

Go back to first principles and co-locate related information (rule of proximity).

Need more guardrails in the state machine to ensure collaboration and turn-taking with players.

Need more one-on-one interactions before adding a second party.
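As a rough illustration of the kind of turn-taking guardrail described above, here is a minimal Python sketch. It is not the project's actual state machine; the `Turn` states, the `ConversationGate` class, and the `expects_reply` flag are all hypothetical names invented for this example. The idea is simply that once an NPC asks something that needs an answer, other NPC lines are queued rather than spoken until the player has had their turn.

```python
from enum import Enum, auto


class Turn(Enum):
    NPC_SPEAKING = auto()
    AWAITING_PLAYER = auto()
    PLAYER_SPEAKING = auto()


class ConversationGate:
    """Hypothetical guardrail: while the player has the floor,
    NPC interjections are queued instead of spoken."""

    def __init__(self):
        self.state = Turn.NPC_SPEAKING
        self.queued = []  # NPC lines held back during the player's turn

    def npc_line(self, speaker, text, expects_reply=False):
        # Hold any NPC line that arrives while the floor belongs to the player.
        if self.state is not Turn.NPC_SPEAKING:
            self.queued.append((speaker, text))
            return False
        # A line that needs an answer opens the player's turn.
        if expects_reply:
            self.state = Turn.AWAITING_PLAYER
        return True

    def player_starts(self):
        if self.state is Turn.AWAITING_PLAYER:
            self.state = Turn.PLAYER_SPEAKING

    def player_done(self):
        # Hand the floor back to the NPCs and release held lines in order.
        self.state = Turn.NPC_SPEAKING
        released, self.queued = self.queued, []
        return released
```

In this sketch, a second NPC's rhetorical question can no longer bury the prompt the player is meant to answer; it is simply delivered after the player finishes.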

Slack Attack

Nobody on the team asked for this update, and playtest feedback on the current design was fine, but it bothered me having so many places to scan for one task. And with the world setting evolving towards a quirky office-comedy vibe, I asked, "What if the Norse pantheon had their own version of Slack?"

Experiment, Just to be Certain

And to really explore this "slackification" notion, I asked one of the designers on my team to iterate on an app-like approach to conversation. This is one of those quick exercises you do as a sanity check. Diverge, converge. Mild to wild.

Version 3.0

Crisp & Clean

But in this case my first instinct was better. Everything is in one place but totally collapsible if the player wants a streamlined view. Rewards inline, conversation objectives right next to the input affordance.

But what Happens in 3rd Person?

The team had experimented with 3rd person conversation modes earlier, but the lag time was too bad. Until it wasn't. So now we have an issue: outside of immersive first-person settings, this arrangement felt very boxy and institutional, especially when staring at the player's back. The hierarchy totally changed.

What Options do we Have?

Turning on all the normal HUD elements alongside the conversation window reveals the limitations of this Slack approach. Is there anything from previous milestones we can revive? Maybe if this were an MMO we could make this work, but I know we can do better.

We will do better.

Key Challenges

Lessons Learned

Things to Keep

With more gameplay opportunities for conversation showing up outside of first-person, the model simply needs to be re-evaluated.

We have a solid approach for first person, but I believe it's time to take a third-person-first approach and see how that plays out.

New lessons beget new pillars. And away we go.

While first person showcases characters and high quality art, the payoff of this kind of conversation play is subtle.

Revisiting Old Experiments

Rewind a few months. A cast-off exploration got me thinking. It was for a mechanic centered on accumulating a resource that lets the player make a plea for a divine favor. What if we created a companion widget that would react to conversation states, allowing the player the freedom to talk when they choose?

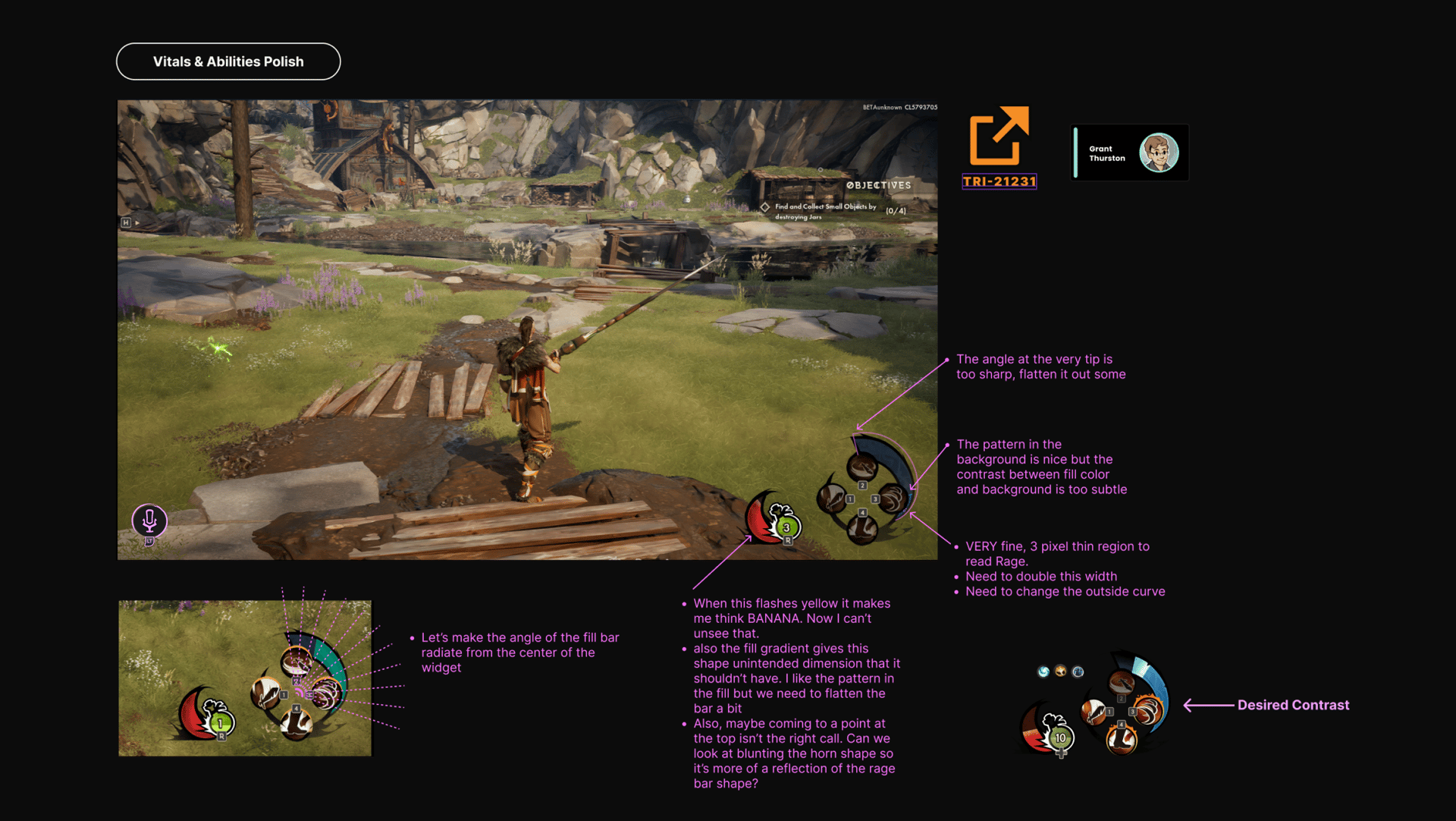

Refactoring the HUD

Adding a companion/push-to-talk affordance in the lower left corner meant sliding vitals, consumables, and affixes to the right, but that was actually a long-overdue improvement.

Version 4.0

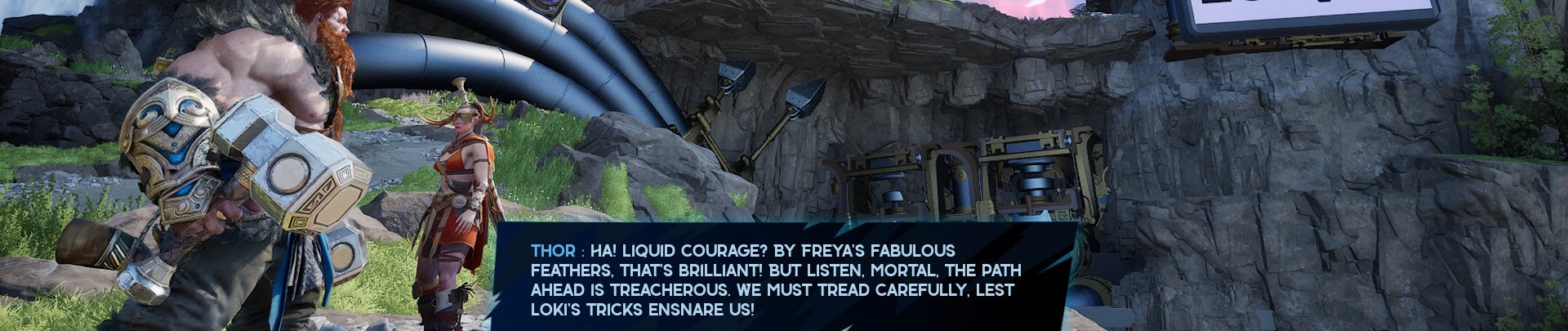

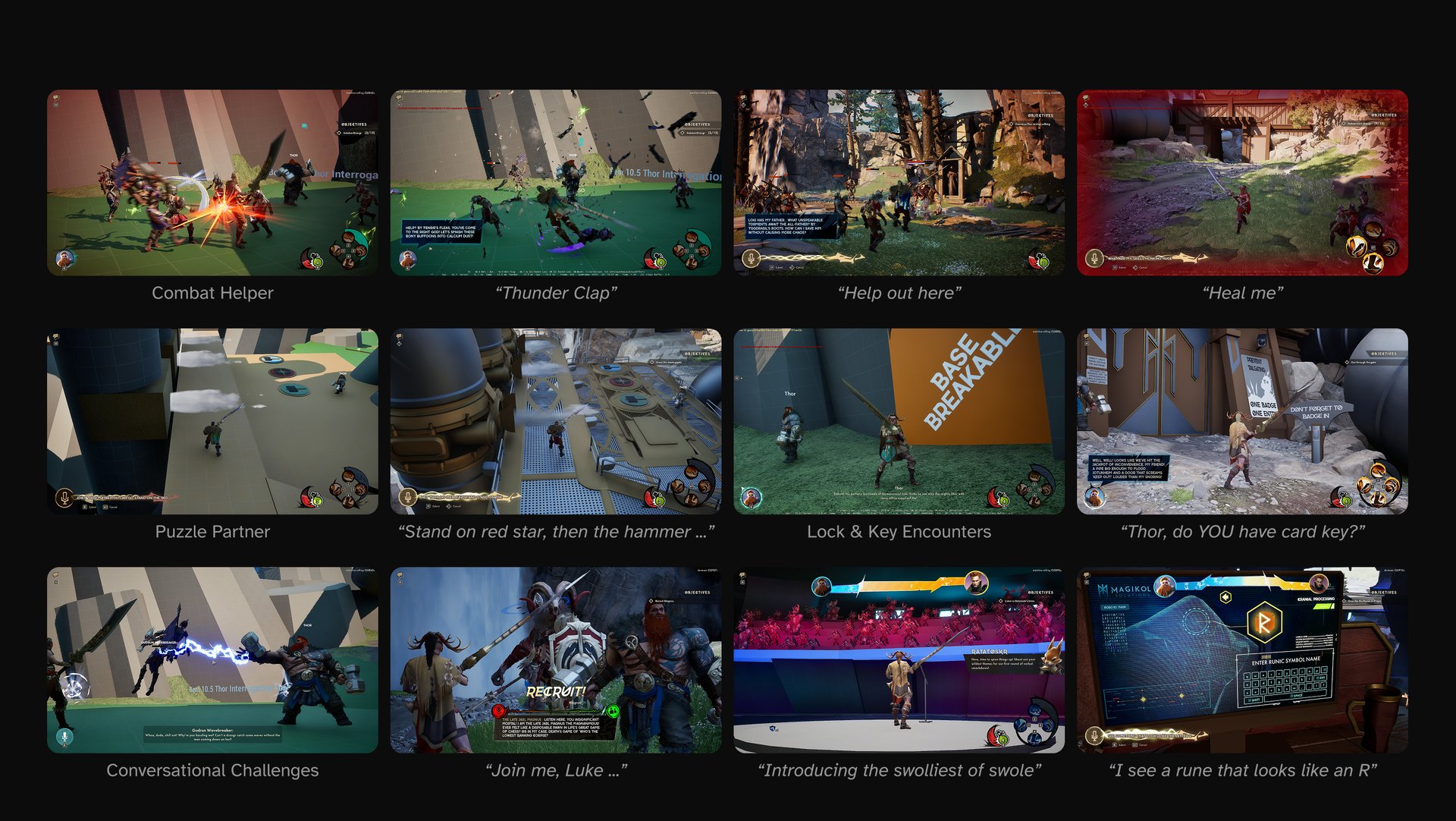

A Whole New Array of Experiences

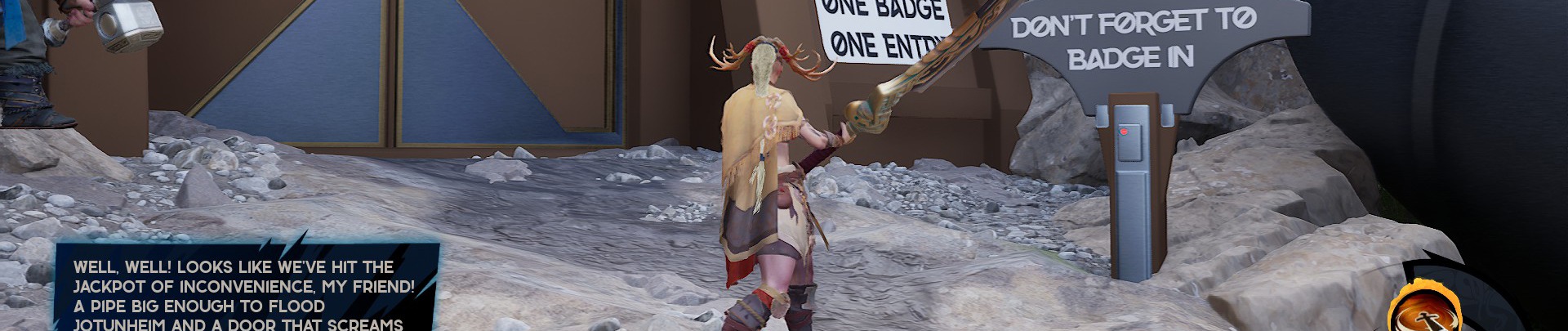

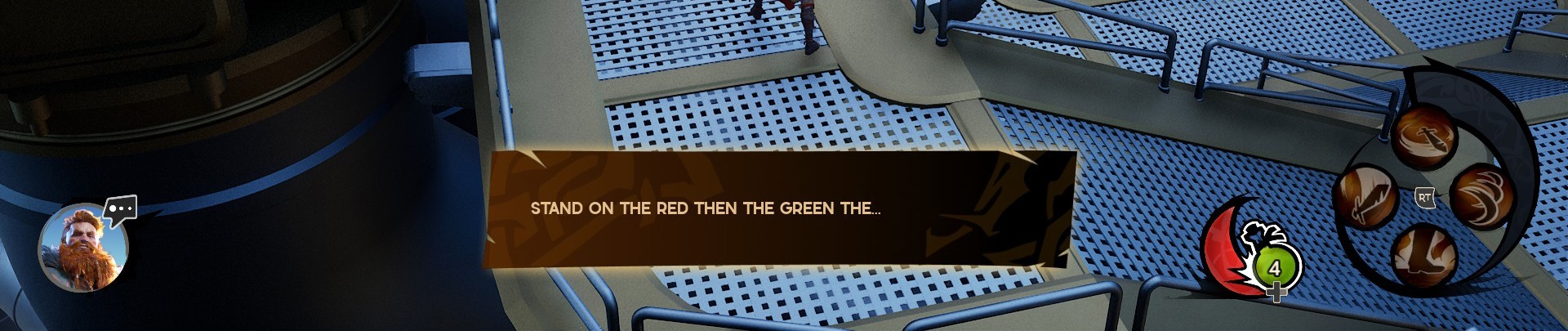

That simple change unlocked a deluge of possibilities. The most notable was companion play, where the player and AI are physically separated. What if the player can now describe a puzzle to the AI and the AI can report back the solution, and vice versa? This opened up puzzle designs and mini-games like "recruiting," where those early conversational tactics experiments suddenly found an application.

A Rich Toolset = Rich Gameplay

The flexibility of the UI library supported a wealth of new gameplay ideas, allowing the team to craft encounters where conversation (voice input) really was gameplay and not just a vehicle for narrative.

Key Challenges

Lessons Learned

Things to Keep

The remaining issues at this point were mostly technical. Server round trips varied from 1 to 12 seconds, the quality of input from the microphone was too hard to debug, and the player was misunderstood about 20% of the time. But this was at least a year out from public release.

AI as companion was much more compelling than AI as open-ended improvisation partner. The narrative opportunities are vast but that can't be the whole game or else it becomes a very niche experience.

A brave new direction opens up a host of gameplay options in the final milestone.

Building experiences ahead of the technology required to deliver at quality is always difficult, but especially so in this case. It was hard to know whether the design wasn't working or the tech just wasn't ready yet. The best antidote to this kind of uncertainty is quick North Star explorations. Every time I made a prototype or coordinated with art on a video, we made big changes, but always for the better.

The Proof

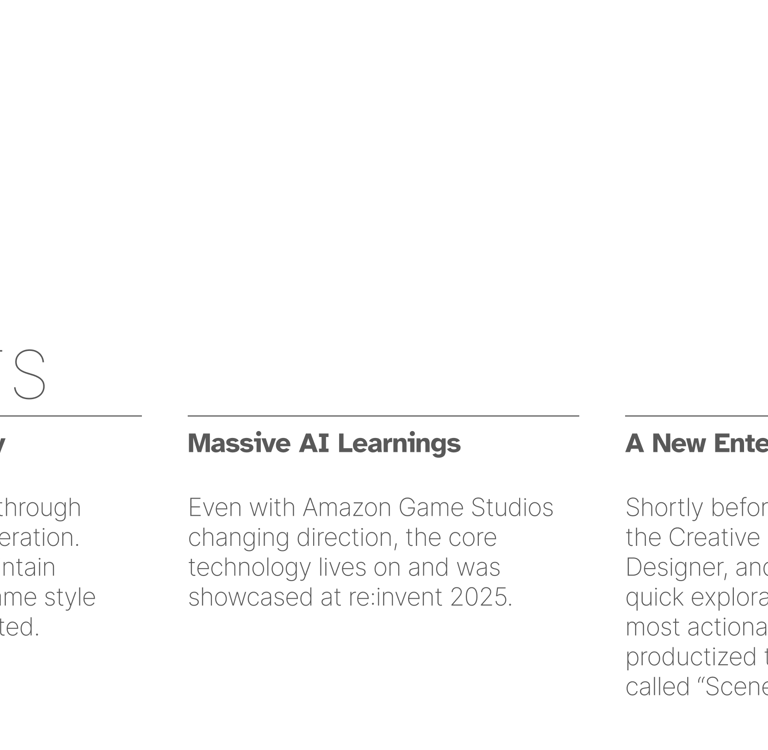

A talking squirrel (Ratatoskr) shows off AI-driven dialog, lip-sync, facial animation, gestures, caption block, voice input, and speech-to-text.

Scene Partner: 007

To further demonstrate the utility of everything that was built for Project Trident, the Creative Director and I (with input from our lead Systems Designer and Studio Manager) put together a concept walkthrough in just 2 days, exploring how we could easily create a platform for connected experiences. I called this "Scene Partner" as it would allow fans of various properties to interact with AI agents using voice, touch, or controller on any screen.

Scene Partner: Lara Croft

Neither of these game concepts is about controlling an avatar on screen. It's all about interacting with beloved characters through conversation and collaborative puzzle solving.

Note: We used AI for animated storyboards and voice overs. I did all the motion graphics, overlays, simulated gameplay, and final render. All in under 2 days each.

Version 5.0

But wait, there's more ...

Note: what follows are unsolicited, speculative works that do not reflect current or planned development for Amazon. I include them to demonstrate that the preceding work was leading towards a platform play grounded in a design-thinking framework.